Crash Course in Scientific Computing

VI. Numbers

Numbers are ultimately what computers deal with, and their classification is a bit different from the one we have seen in Platonic Mathematics, where we introduced the big family of numbers $\mathbb{N}$, $\mathbb{Z}$, $\mathbb{Q}$, $\mathbb{R}$ and $\mathbb{C}$. The basic numbers are bits, which were the starting point of the course. We have already seen how integers derive from them by grouping several bits, basically changing from base 2 to base 10 or base 16 (hexadecimal). There is much more to the story, as can be seen from the following lines of code we used in our definition of the Collatz sequence:

if n%2==0

n÷=2

else

n=3n+1

end
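For reference, the loop body above can be wrapped into a complete, runnable function. This is a minimal sketch: the name collatz_steps and the choice to count the steps needed to reach 1 are ours, not necessarily the definition used earlier in the course.

```julia
# Hypothetical helper: number of steps the Collatz map needs to bring n down to 1
function collatz_steps(n::Integer)
    n > 0 || throw(ArgumentError("n must be positive"))
    steps = 0
    while n != 1
        if n % 2 == 0
            n ÷= 2          # integer division: n stays an integer
        else
            n = 3n + 1
        end
        steps += 1
    end
    return steps
end

println(collatz_steps(6))    # 8: 6, 3, 10, 5, 16, 8, 4, 2, 1
println(collatz_steps(27))   # 111
```

Replacing ÷= with /= would turn n into a Float64 after the first even step, which is exactly the distinction between the two divisions.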

Note that we used integer division, which turns the even integer, e.g., 42, into another integer:

julia> 42÷2

21

Compare this to standard division:

julia> 42/2

21.0

The result looks the same, but these are actually different types of numbers. Julia offers typeof() to tell us about the so-called "type" of the object:

julia> typeof(21)

Int64

julia> typeof(21.0)

Float64
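A short sketch contrasting the two divisions and the remainder used in the Collatz code (the outputs in comments assume a 64-bit machine, where Int is Int64):

```julia
q = 42 ÷ 2      # integer division, same as div(42, 2): the result is an Int
f = 42 / 2      # standard division: the result is always a Float64
r = 43 % 2      # remainder, same as rem(43, 2): this is how parity is tested

println(q, " ", typeof(q))      # 21 Int64
println(f, " ", typeof(f))      # 21.0 Float64
println(q == f)                 # true: same value, different types
println(7 ÷ 2, " ", 7 / 2)      # 3 3.5
println(-7 ÷ 2)                 # -3: ÷ truncates toward zero
```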

That also works with other kinds of objects:

julia> typeof("hi")

String

julia> typeof('!')

Char

But today we are concerned with numbers. The type tells which set of values a variable can take and how many bits are required to encode it. It can be Int (for integer, or whole number, a subset of $\mathbb{Z}$), possibly with a U prefix (UInt) for unsigned (a subset of $\mathbb{N}$), or Float (for floating-point, a subset of $\mathbb{Q}$). The number of bits is given in the type: UInt128 is an unsigned integer with 128 bits and Float64 is a floating-point number with 64 bits. There is also support for arbitrary-precision numbers beyond these fixed sizes, as we shall see later on.
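The number of bits behind each type can be checked with sizeof, which returns a size in bytes. A quick sketch over a few of the types just mentioned:

```julia
for T in (Int8, UInt8, Int32, Int64, UInt128, Float32, Float64)
    bits = 8 * sizeof(T)                      # sizeof gives bytes, 8 bits per byte
    kind = T <: Unsigned ? "unsigned integer" :
           T <: Signed   ? "signed integer"   : "floating-point"
    println(rpad(string(T), 8), lpad(bits, 4), " bits  ", kind)
end
```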

Depending on the architecture of your microprocessor, the basic Int is defined as Int32 (32-bit architecture) or Int64 (64-bit architecture). You can find out by asking:

julia> Int

Int64

Even on a 32-bit architecture, integer literals that don't fit in 32 bits are encoded with 64 bits. If you go beyond 64 bits, you get an overflow:

julia> 2^64

0
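The 0 is not random: fixed-size integer arithmetic is modular, i.e., Int64 results are reduced modulo $2^{64}$. The sketch below assumes a 64-bit machine (as do the outputs in comments); widen and Base.Checked.checked_mul are standard Julia functions, used here to show two ways of avoiding the silent wraparound.

```julia
println(Sys.WORD_SIZE)                           # 64 on a 64-bit machine, 32 on a 32-bit one
println(typeof(3_000_000_000))                   # Int64 on both: too large for an Int32

println(2^64)                                    # 0, since 2^64 ≡ 0 (mod 2^64)
println(2^63 == typemin(Int64))                  # true: wraps to the most negative Int64
println(typemax(Int64) + 1 == typemin(Int64))    # true

x = widen(2)^64                                  # widen: Int64 -> Int128, where 2^64 fits
println(x, " ", typeof(x))                       # 18446744073709551616 Int128

try
    Base.Checked.checked_mul(typemax(Int64), 2)  # checked arithmetic refuses to wrap
catch err
    println(typeof(err))                         # OverflowError
end
```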

The command bitstring() shows how a number is encoded as bits. Here is the computer counting up to 3:

julia> [bitstring(i) for i∈0:3]

4-element Array{String,1}:

"0000000000000000000000000000000000000000000000000000000000000000"

"0000000000000000000000000000000000000000000000000000000000000001"

"0000000000000000000000000000000000000000000000000000000000000010"

"0000000000000000000000000000000000000000000000000000000000000011"

A lot of bits for nothing (1st line).
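The 64 bits come from 0 being an Int64 here; smaller types use fewer bits, and parse(...; base=2) goes back from bits to a number. The sketch also shows -1: its pattern of all ones reflects the two's-complement encoding Julia uses for signed integers, a detail beyond the sign bit discussed in these notes.

```julia
println(bitstring(Int8(3)))          # 00000011: only 8 bits for an Int8
println(bitstring(0x0a))             # 00001010: 0x0a is a UInt8
println(bitstring(Int8(-1)))         # 11111111: two's complement
println(parse(Int, "101"; base=2))   # 5: from bits back to a number
println(count_ones(7))               # 3: number of 1 bits in 7 = 0b111
```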

By default, Julia uses hexadecimal for unsigned integers; a literal such as 0xa is a UInt8:

julia> a=0xa

0x0a

julia> typeof(a)

UInt8

You can convert that back into decimal by combining it with a neutral element (0 for addition, 1 for multiplication), but this changes the type, since the result is promoted to the default Int:

julia> 1*0xa

10

julia> typeof(ans)

Int64
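Hexadecimal literals are not always UInt8: according to the Julia manual, the size is chosen from the number of hex digits written, leading zeros included. A sketch checking this rule:

```julia
# 1-2 hex digits -> UInt8, 3-4 -> UInt16, 5-8 -> UInt32, 9-16 -> UInt64, 17-32 -> UInt128
for lit in (0xa, 0x0a0, 0xffff, 0xffffffffffffffff, 0x0ffffffffffffffff)
    println(repr(lit), " :: ", typeof(lit))
end

println(1 * 0xa, " ", typeof(1 * 0xa))   # 10 Int64 (64-bit machine): Int times UInt8 gives Int
println(string(10; base=16))             # a: from a number back to hex digits
```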

With $N$ bits, unsigned values range from $0$ to $2^{N}-1$ (the $-1$ because $0$ takes one of the $2^{N}$ patterns), while for signed values one bit must go to the sign information, which reduces the range to $-2^{N-1}$ to $2^{N-1}-1$: there is one more negative number than there are positive ones!
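These ranges can be compared with Julia's typemin and typemax. In this sketch, $N$ is taken as 8*sizeof(T), and the powers of 2 are computed as BigInt (introduced later in these notes) so that the check itself cannot overflow:

```julia
for (S, U) in ((Int8, UInt8), (Int16, UInt16), (Int32, UInt32), (Int64, UInt64))
    N = 8 * sizeof(S)
    @assert typemin(U) == 0 && typemax(U) == big(2)^N - 1                     # unsigned range
    @assert typemin(S) == -big(2)^(N - 1) && typemax(S) == big(2)^(N - 1) - 1  # signed range
    println(S, ": ", typemin(S), " to ", typemax(S), ",  ", U, ": 0 to ", big(typemax(U)))
end
```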

So the maximum value for the default integer (on a 64-bit architecture) is $2^{63}-1=9\,223\,372\,036\,854\,775\,807$, or about $9\times10^{18}$, nine quintillion. That's a big number: a million million million, or a billion billion. We can get this number from:

julia> typemax(Int64)

9223372036854775807

or, equivalently:

julia> 2^63-1

9223372036854775807

If, however, we ask for

julia> 2^63

-9223372036854775808

This is called an overflow. On the positive side (no pun intended), UInts take us farther, but Julia displays them in hexadecimal, on the understanding that numbers without a sign typically serve as computer pointers, or addresses. Therefore:

julia> typemax(UInt)

0xffffffffffffffff

and

julia> 0xffffffffffffffff+1

0x0000000000000000

Overflow again: although there is a UInt128 type, the computer doesn't switch to it automatically. Unfortunately, it appears one can't force the machine to do it with a type declaration either, at least in Julia versions before 1.8, which do not support typed global variables:

julia> x::UInt128=0xffffffffffffffff

ERROR: syntax: type declarations on global variables are not yet supported

so one has to find a way out, for instance by writing the literal with an extra leading zero, which makes Julia pick UInt128:

julia> 0x0ffffffffffffffff

0x0000000000000000ffffffffffffffff

julia> typeof(0x0ffffffffffffffff)

UInt128

in which case the arithmetic works again:

julia> 0x0ffffffffffffffff+1

0x00000000000000010000000000000000
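Another way out, sketched here, is to convert explicitly with the UInt128 constructor, or to let Julia's widen pick the next larger type:

```julia
x = UInt128(0xffffffffffffffff)    # same value, now stored on 128 bits
y = x + 1                          # 1 is promoted to UInt128: no wraparound
println(typeof(y))                 # UInt128
println(y == UInt128(2)^64)        # true
println(typeof(widen(0xffffffffffffffff)))   # UInt128: widen goes UInt64 -> UInt128
```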

at a cost to memory though:

julia> bitstring(0xffffffffffffffff)

"1111111111111111111111111111111111111111111111111111111111111111"

julia> bitstring(0x0ffffffffffffffff+1)

"00000000000000000000000000000000000000000000000000000000000000010000000000000000000000000000000000000000000000000000000000000000"

How could we figure out which number, in decimal, this is?

julia> typemax(UInt128)

0xffffffffffffffffffffffffffffffff

There are two ways, a lawful one and a sophisticated one. There's also a cunning way, teaming up with the computer: the number is twice the following, plus one:

julia> typemax(Int128)

170141183460469231731687303715884105727
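The cunning way spelled out: $2^{128}-1=2\,(2^{127}-1)+1$, i.e., twice typemax(Int128) plus one. In this sketch the arithmetic is done in UInt128, since doubling in Int128 would itself overflow:

```julia
half = typemax(Int128)               # 2^127 - 1
m = 2 * UInt128(half) + 1            # computed in UInt128: 2^128 - 1, no overflow
println(m == typemax(UInt128))       # true
println(2 * half)                    # -2: doubling in Int128 wraps around
```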

We start with the lawful one, turning to Floats.

Floats are the type of so-called "floating-point arithmetic", which is a beautiful expression to refer to numbers with decimals, such as 1.4142135623730951 (a computer approximation to $\sqrt{2}$). When dealing with such computer abstractions of real numbers, one has to worry about the range (how far one can go in the big numbers $|x|\gg1$ and in the small numbers $|x|\ll1$) and the precision (how many significant digits are kept, which limits how finely two close numbers can be told apart).

https://en.wikipedia.org/wiki/Radix_point

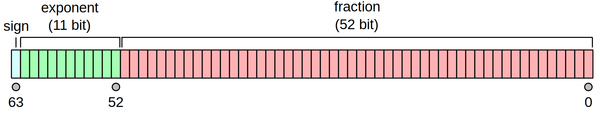

The widely accepted convention is the one defined by the IEEE 754 standard. A double-precision (64-bit) floating-point number is made of:

- The 1-bit sign is 0 for positive, 1 for negative. Simple (we started the course with this fact).

- The 11-bit exponent can take $2^{11}=2048$ values, from 0 to 2047, with an offset (bias) of 1023 to cover both positive and negative powers:

  - $0\to$ zero and subnormal numbers.

  - $1\to 2^{-1023+1}=2^{-1022}\approx 2\times10^{-308}$

  - $2\to 2^{-1023+2}=2^{-1021}\approx 4\times10^{-308}$

  - ...

  - $k$ with $1\le k\le2046\to 2^{-1023+k}$

  - ...

  - $1023\to 2^0=1$

  - ...

  - $2046\to 2^{-1023+2046}=2^{1023}\approx 9\times10^{307}$, so that the largest double is $(2-2^{-52})\times2^{1023}\approx 1.8\times10^{308}$

  - $2047\to $ special numbers: NaN and $\infty$.

- The 52-bit mantissa (or significand) gives the value which is to be scaled by the power of two:

$$(-1)^{\text{sign}}(1.b_{51}b_{50}...b_{0})_{2}\times 2^{\text{exponent}-1023}$$

or

$$(-1)^{\text{sign}}\left(1+\sum _{i=1}^{52}b_{52-i}2^{-i}\right)\times 2^{\text{exponent}-1023}$$
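The three fields can be pulled out of a Float64 with bit masks, and the formula above applied to rebuild the number. A minimal sketch, valid for normal numbers only (not zero, subnormals, NaN or $\infty$); the helper names fields and rebuild are hypothetical:

```julia
# Hypothetical helper: sign bit, biased exponent and 52-bit mantissa of a Float64
function fields(x::Float64)
    bits = reinterpret(UInt64, x)               # same 64 bits, read as an integer
    s = Int(bits >> 63)                         # 1 sign bit
    e = Int((bits >> 52) & 0x7ff)               # 11 exponent bits (biased by 1023)
    m = bits & ((UInt64(1) << 52) - 1)          # 52 mantissa bits
    return s, e, m
end

# Hypothetical helper: (-1)^sign (1 + m/2^52) 2^(exponent-1023), normal numbers only
rebuild(s, e, m) = (-1)^s * (1 + m / 2.0^52) * 2.0^(e - 1023)

for x in (1.0, 0.75, -5.0, 0.00237)
    s, e, m = fields(x)
    println(x, ": sign=", s, " exponent=", e, " (2^", e - 1023, ") rebuilt=", rebuild(s, e, m))
end

println(fields(Inf)[2], " ", fields(NaN)[2])              # 2047 2047: the reserved exponent
println(floatmin(Float64) == 2.0^-1022)                   # true: exponent field 1
println(floatmax(Float64) == (2 - 2.0^-52) * 2.0^1023)    # true: exponent field 2046
```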

When encoding numbers in binary, there is an interesting oddity: one gets a bit for free, namely the leading bit, which is always 1 whatever the number (except for 0 and the subnormals). Indeed, 0.75, for instance, is $7.5\times 10^{-1}$ in decimal floating-point notation, and while its leading digit is 7 in base 10 (it could have been 3 if the number were 0.3), in base 2 it always becomes a 1, since by definition one shifts the radix point until the first 1 is the leading figure. This bit is therefore always redundant (except, again, for zero):

- $0.75\to 0.11_2=1.1_2\times 2^{-1}=\left(1+{1\over2}\right)\times2^{-1}$

- $$\begin{aligned}0.00237&\to0.0000000010011011010100100000000001111101110101000100000100111\dots_2\\&=1.0011011010100100000000001111101110101000100000100111\dots_2\times 2^{-9}\\&=\left(1+{1\over2^3}+{1\over2^4}+{1\over2^6}+{1\over2^7}+{1\over2^9}+\dots+{1\over2^{50}}+{1\over2^{51}}+{1\over2^{52}}+\dots\right)\times2^{-9}\end{aligned}$$

Of course this remains true for numbers $>1$:

- $5\to 101_2=1.01_2\times 2^{2}=\left(1+{1\over4}\right)\times2^{2}$

so one does not need to store this bit.
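Julia exposes this normalized form directly through significand and exponent, and precision(Float64) counts the hidden bit on top of the 52 stored ones. A short sketch on the examples above:

```julia
for x in (0.75, 0.00237, 5.0)
    println(x, " = ", significand(x), " × 2^", exponent(x))   # significand is in [1, 2)
end
println(precision(Float64))   # 53 = 52 stored bits + 1 hidden leading bit
```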

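Floating-point performance is measured in flops (floating-point operations per second), and floating-point arithmetic is carried out in hardware by the FPU (floating-point unit).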

Floating-point numbers allow the radix point to move ("to float"), as opposed to fixed-point arithmetic, where its position is fixed, e.g.,

The stored digits go by various names, including significand, mantissa, or coefficient.

The sophisticated way to go beyond Int128 is to turn to its generalization, known as BigInt (we're not even counting in terms of bits anymore).

This is a beautiful "computer equation":

julia> typemax(UInt128)==big(2)^128-1

true

(we could also use 2^big(128)). This shows us that the biggest UInt128 is $340\,282\,366\,920\,938\,463\,463\,374\,607\,431\,768\,211\,455\approx 3.4\times10^{38}$, about 340 undecillion (just short of a duodecillion).
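A few more BigInt computations, as a sketch. factorial is Julia's own function; for fixed-size integers it throws an OverflowError rather than wrapping around (from 21! on for Int64), while big(30) makes it exact:

```julia
n = big(2)^128 - 1
println(typeof(n))                   # BigInt
println(n == typemax(UInt128))       # true
println(2^big(128) == big(2)^128)    # true: one BigInt operand is enough
println(ndigits(big(2)^200))         # 61: beyond any fixed-size integer type
println(factorial(big(30)))          # 265252859812191058636308480000000
```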

- float (single precision, Float32 in Julia): 32 bits, with a precision of 24 bits (about 7 decimal digits). It represents exactly all integers up to $2^{24}$ in absolute value.

- double (double precision, Float64 in Julia): 64 bits, with a precision of 53 bits (about 16 decimal digits). It represents exactly all integers up to $2^{53}$ in absolute value.

An ugly computer equation:

julia> 2.0^53+1==2.0^53

true
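The same collision happens in single precision, and Julia's maxintfloat gives the threshold for each type. A sketch:

```julia
println(maxintfloat(Float32) == 2f0^24)   # true: last of the consecutive exact integers in Float32
println(maxintfloat(Float64) == 2.0^53)   # true: same for Float64
println(2f0^24 + 1 == 2f0^24)             # true: 2^24 + 1 is not representable in Float32
println(2.0^53 + 2 == 2.0^53)             # false: even integers are still exact there
println(precision(Float32), " ", precision(Float64))   # 24 53
```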

There are curiosities in the encoding, like the existence of a negative zero, which is equal to zero but not in all operations:

julia> bitstring(0.0)

"0000000000000000000000000000000000000000000000000000000000000000"

julia> bitstring(-0.0)

"1000000000000000000000000000000000000000000000000000000000000000"

julia> 0.0==-0.0

true

julia> 1/0.0, 1/-0.0

(Inf, -Inf)
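Julia offers several notions of equality that treat the two zeros differently; a sketch:

```julia
println(0.0 == -0.0)           # true: == follows the IEEE rule, the zeros compare equal
println(isequal(0.0, -0.0))    # false: isequal tells the two zeros apart
println(0.0 === -0.0)          # false: the bit patterns differ
println(signbit(-0.0))         # true: the sign bit is set
println(copysign(1.0, -0.0))   # -1.0
```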

When a calculation yields a nonzero result smaller in magnitude than the smallest number that can be encoded, one speaks of an underflow.
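A sketch of where underflow starts for Float64: below floatmin come the subnormals (ldexp(1.0, -1074) builds $2^{-1074}$ exactly), and below the smallest subnormal results round to zero:

```julia
tiny = nextfloat(0.0)                 # smallest positive Float64, a subnormal
println(tiny == ldexp(1.0, -1074))    # true: tiny = 2^-1074
println(issubnormal(tiny))            # true
println(floatmin(Float64))            # 2.2250738585072014e-308: smallest normal Float64
println(tiny / 2)                     # 0.0: underflow, rounded to zero
println(1e-200 * 1e-200)              # 0.0: another underflow
```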

The arithmetical difference between two consecutive representable floating-point numbers which have the same exponent is called a unit in the last place (ULP).
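In Julia, eps(x) returns the distance from x to the next larger representable float, i.e., the ULP at x. A sketch showing how the gap grows with the exponent:

```julia
println(eps(1.0) == 2.0^-52)                # true: ULP at 1.0
println(nextfloat(1.0) - 1.0 == eps(1.0))   # true: gap to the next representable number
println(eps(2.0^52))                        # 1.0: at 2^52 the gap is a whole unit
println(eps(1e16))                          # 2.0: only even integers are representable here
println(eps(1.0e-10) < eps(1.0))            # true: the gap scales with the exponent
```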

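function reversebitstring(::Type{T}, str::String) where {T<:Base.IEEEFloat}  # inverse of bitstring()

length(str) == 8*sizeof(T) || throw(ArgumentError("expected $(8*sizeof(T)) bits"))  # one character per bit of T

unsignedbits = Meta.parse(string("0b", str))  # a "0b..." literal is a UInt16/32/64 depending on its number of digits

thefloat = reinterpret(T, unsignedbits)  # same bits, read as a float of type T

return thefloat

end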

reversebitstring(Float64, "0100000001011110110111010010111100011010100111111011111001110111")
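The Meta.parse trick works because the number of digits of a 0b literal fixes its unsigned type. A variant that avoids parsing code, sketched here for Float64 only, uses parse with base=2 and checks the round trip:

```julia
s = bitstring(0.1)                         # 64 characters
u = parse(UInt64, s; base=2)               # the same bits as a UInt64
x = reinterpret(Float64, u)                # read back as a Float64
println(x === 0.1)                         # true: the round trip is exact
println(u == reinterpret(UInt64, 0.1))     # true
```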

Rounding

More on the REPL at https://en.wikibooks.org/wiki/Introducing_Julia/The_REPL

You can initialize an empty Vector of any type by writing the type in front of []. For example:

Float64[] # an empty Vector{Float64}

Array{Float64, 2}[] # an empty Vector whose elements are matrices, Array{Float64,2}

Any[] # can contain anything
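A sketch of filling such vectors with push!; note that values are converted to the element type on insertion:

```julia
v = Float64[]                  # empty Vector{Float64}
push!(v, 1)                    # the Int 1 is converted to 1.0
push!(v, 2.5)
println(v, " ", eltype(v))     # [1.0, 2.5] Float64

w = Any[]                      # elements of any type
push!(w, 1, "two", 3.0)
println(length(w))             # 3

mats = Array{Float64, 2}[]     # a vector whose elements are matrices
push!(mats, zeros(2, 2))
println(size(mats[1]))         # (2, 2)
```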