m (→XVIII. Fitting) |

m |

||

| Line 3: | Line 3: | ||

We have seen that interpolation works great theoretically but can lead to unpleasant results. Let alone Runge's phenomenon, we certainly do not want to constrain our model to pass by all points, taking into account irrelevant and meaningless fluctuations, noise, etc. While this is theoretically possible if we provide sufficient degrees of freedom, this is probably meaningless. What we typically want, instead, is the best overall agreement, without stretching too far our underlying model, which usually comes with its fixed number of degrees of freedom anyway. This problem is that of ''fitting'', which is of extreme importance in most quantitative science. | We have seen that interpolation works great theoretically but can lead to unpleasant results. Let alone Runge's phenomenon, we certainly do not want to constrain our model to pass by all points, taking into account irrelevant and meaningless fluctuations, noise, etc. While this is theoretically possible if we provide sufficient degrees of freedom, this is probably meaningless. What we typically want, instead, is the best overall agreement, without stretching too far our underlying model, which usually comes with its fixed number of degrees of freedom anyway. This problem is that of ''fitting'', which is of extreme importance in most quantitative science. | ||

| − | |||

| − | |||

Say that we have $N$ data points $y_1$, ..., $y_N$ obtained for the values $x_1$, ..., $x_N$ that we expect are accounted for by the "model" $f$, i.e., a function $f(x,\vec\beta)$ of $\nu$ parameters $\beta_1$, ..., $\beta_\nu$ kept in a vector $\vec\beta$: | Say that we have $N$ data points $y_1$, ..., $y_N$ obtained for the values $x_1$, ..., $x_N$ that we expect are accounted for by the "model" $f$, i.e., a function $f(x,\vec\beta)$ of $\nu$ parameters $\beta_1$, ..., $\beta_\nu$ kept in a vector $\vec\beta$: | ||

| Line 125: | Line 123: | ||

which is also exact, but remains so even without the computer. These formulas are used by most people as coming from the theory black box. Now you know where this comes from. | which is also exact, but remains so even without the computer. These formulas are used by most people as coming from the theory black box. Now you know where this comes from. | ||

| − | + | Like we did for interpolation, let us now progress from the linear case to polynomials. For interpolation, we had the strong result that a polynomial of order $N-1$ could fit $N$ data points exactly. There is a stronger result for fitting with polynomials, known as the [https://en.wikipedia.org/wiki/Stone%E2%80%93Weierstrass_theorem Weierstrass Approximation Theorem], which states that every continuous function defined on a closed interval can be uniformly approximated as closely as desired by a polynomial function. This shows again how fitting overcomes interpolation, which breaks down in the hand of Runge's phenomenon. Weierstrass shows, on the other hand, that polynomials are good enough to get arbitrarily close, as long as we do not demand them to be exact on particular values. | |

| + | |||

| + | The result also follows from the general theory, in which case: | ||

$$f(x;\beta)\equiv\sum_{k=0}^N\beta_k x^k$$ | $$f(x;\beta)\equiv\sum_{k=0}^N\beta_k x^k$$ | ||

| Line 138: | Line 138: | ||

}$$ | }$$ | ||

| − | Let us | + | Namely, for quadratic fitting, this reads: |

| + | |||

| + | $$\Phi=\pmatrix{ | ||

| + | 1 & x_1 & x_1^2 \\ | ||

| + | 1 & x_2 & x_2^2 \\ | ||

| + | &\vdots & \\ | ||

| + | 1 & x_N & x_N^2 | ||

| + | }$$ | ||

| + | |||

| + | so that | ||

| + | |||

| + | $$\vec\beta | ||

| + | =(\Phi^\dagger\Phi)^{-1}\Phi^\dagger\vec y=\pmatrix{ | ||

| + | N & \sum_{i=1}^N x_i & \sum_{i=1}^N x_i^2 \\ | ||

| + | \sum_{i=1}^N x_i & \sum_{i=1}^N x_i^2 & \sum_{i=1}^N x_i^3 \\ | ||

| + | \sum_{i=1}^N x_i^2 & \sum_{i=1}^N x_i^3 & \sum_{i=1}^N x_i^4 | ||

| + | }^{-1}\pmatrix{\sum_{i=1}^N y_i \\ \sum_{i=1}^N y_i x_i \\ \sum_{i=1}^N y_i x_i^2} | ||

| + | $$ | ||

| + | |||

| + | which, being $3\times3$, can be inverted formally, to produce exact results. It is more convenient, however, to have the computation performed numerically. Let us turn to the computer, to best interpolate quadratically, say: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | lx=[1, 2, 3, 5, 7]; | ||

| + | ly=[3, 2, 2, 4, 11] | ||

| + | scatter(lx, ly, legend=false, xlabel="x", ylabel="y") | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | <center><wz tip="Parabola-looking points.">[[File:Screenshot_20220404_135832.png|400px]]</wz></center> | ||

| + | |||

| + | It looks reasonable that one will get a good quadratic fit to such data (and clearly not with a mere linear fit). We provide directly $\Phi^\dagger\Phi$: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | ΦdΦ=[length(lx) sum(lx) sum(lx.^2); | ||

| + | 0 sum(lx.^2) sum(lx.^3); | ||

| + | 0 0 sum(lx.^4)] | ||

| + | ΦdΦ[2,1]=ΦdΦ[1,2]; | ||

| + | ΦdΦ[3,1]=ΦdΦ[1,3]; | ||

| + | ΦdΦ[3,2]=ΦdΦ[2,3]; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | we now have our matrix: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | ΦdΦ | ||

| + | 3×3 Array{Int64,2}: | ||

| + | 5 18 88 | ||

| + | 18 88 504 | ||

| + | 88 504 3124 | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | and its numerical inverse: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | inv(ΦdΦ) | ||

| + | 3×3 Array{Float64,2}: | ||

| + | 2.78465 -1.58316 0.176972 | ||

| + | -1.58316 1.04957 -0.124733 | ||

| + | 0.176972 -0.124733 0.0154584 | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | and thus the best-fitting parameters: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | beta=inv(ΦdΦ)*[sum(ly), sum(ly.*lx), sum(ly.*(lx.^2))] | ||

| + | 3-element Array{Float64,1}: | ||

| + | 5.33262260127934 | ||

| + | -2.6982942430703787 | ||

| + | 0.49893390191898135 | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | which provide the best quadratic fit: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | plot!(x->beta[1]+beta[2]*x+beta[3]*x^2, 0, 8) | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | <center><wz tip="Best quadratic fit to the data.">[[File:Screenshot_20220404_144111.png|400px]]</wz></center> | ||

| + | |||

| + | This is how close we manage to get: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | (x->beta[1]+beta[2]*x+beta[3]*x^2).(lx) | ||

| + | 5-element Array{Float64,1}: | ||

| + | 3.1332622601279425 | ||

| + | 1.9317697228145079 | ||

| + | 1.728144989339036 | ||

| + | 4.31449893390198 | ||

| + | 10.892324093816775 | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | to the sought values: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | ly | ||

| + | 5-element Array{Int64,1}: | ||

| + | 3 | ||

| + | 2 | ||

| + | 2 | ||

| + | 4 | ||

| + | 11 | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | with total squares of residuals: | ||

| + | |||

| + | <syntaxhighlight lang="python"> | ||

| + | sum(((x->beta[1]+beta[2]*x+beta[3]*x^2).(lx)-ly).^2) | ||

| + | 0.2068230277185501 | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | The statement is that no over parabola is such that the corresponding square distances get below this value. We have involved a numerical inverse. If we had recoursed to the exact result, we would have found the best parabola to be: | ||

| + | |||

| + | $$f(x)={2501\over469}-{2531\over938}x+{234\over469}x^2$$ | ||

| + | |||

| + | with total squares of residuals $97/469$ which evaluates to the same numerical value that we have found above. | ||

| + | |||

| + | We now generalize the procedure to higher-order polynomials. This piece of code can do it for the general case: | ||

| − | |||

| − | We conclude with fitting with functions other than polynomials. In computer science, they typically provide enough generality, but in physics, many functional dependencies are of a different type. For instance, it is customary to encounter exponential | + | We conclude with fitting with functions other than polynomials. In computer science, they typically provide enough generality, but in physics, many functional dependencies are of a different type. For instance, it is customary to encounter exponential behavior (which requires an infinite number of polynomials). |

One can use the linear squares rigorously as long as the coefficients $\beta$ are linear. Sometimes it could appear there is a clever trick to extend its reach of action. Say we have data distributed according to (lifetimes of a radioactive sample of size $N(0)$): | One can use the linear squares rigorously as long as the coefficients $\beta$ are linear. Sometimes it could appear there is a clever trick to extend its reach of action. Say we have data distributed according to (lifetimes of a radioactive sample of size $N(0)$): | ||

Revision as of 12:56, 4 April 2022

Crash Course in Scientific Computing

XVIII. Fitting

We have seen that interpolation works great theoretically but can lead to unpleasant results. Let alone Runge's phenomenon, we certainly do not want to constrain our model to pass by all points, taking into account irrelevant and meaningless fluctuations, noise, etc. While this is theoretically possible if we provide sufficient degrees of freedom, this is probably meaningless. What we typically want, instead, is the best overall agreement, without stretching too far our underlying model, which usually comes with its fixed number of degrees of freedom anyway. This problem is that of fitting, which is of extreme importance in most quantitative science.

Say that we have $N$ data points $y_1$, ..., $y_N$ obtained for the values $x_1$, ..., $x_N$ that we expect are accounted for by the "model" $f$, i.e., a function $f(x,\vec\beta)$ of $\nu$ parameters $\beta_1$, ..., $\beta_\nu$ kept in a vector $\vec\beta$:

$$r_i=f(x_i,\vec\beta)-y_i\,.$$

A good way to fit our data with the model is by minimizing the sum of the squared residuals (how much is left out by the model from the actual data):

$$S=\sum_{i=1}^N r_i^2\,.$$

We take the square as otherwise, positive and negative residuals would cancel without providing an exact fit. To find the optimum, that is, smallest, sum of squares, we vary $\beta$s such that $S$ is minimum, which is achieved when all $\partial S/\partial\beta_j$ terms for $1\le j\le\nu$ are canceled simultaneously:

$$\frac{\partial S}{\partial\beta_j}=2\sum_{i=1}^N(f(x_i,\vec\beta)-y_i)\frac{\partial f(x_i,\vec\beta)}{\partial\beta_j}=0\,,\quad\text{for $j=1,\dots,\nu$}\,.\tag{1}$$

This is a complicated problem in general, that typically requires iterations over trials and errors.

There is a case where Eq. (1) is considerably simplified, namely, if the partial derivative can be cancelled out, which occurs for so-called linear least squares where the parameters $\beta$ are merely linear coefficients of functions (that can be, of course, nonlinear). That is to say, our test function reads:

$$f(x;\beta)=\sum_{k=1}^\nu\beta_k\phi_k(x)\,,$$

in which case:

$$\frac{\partial f(x_i;\beta)}{\partial\beta_j}=\phi_j(x_i)\,.$$

We put this back in each term of the differential in $\beta$:

$$\frac{\partial S}{\partial\beta_j}=2\sum_{i=1}^N\left(\left[\sum_{k=1}^\nu\beta_k\phi_k(x_i)\right]-y_i\right)\phi_j(x_i)=0$$

and now, it remains to rearrange this equality so that we can write it in matrix form. To do so, we have to remember these properties (and notations):

Concatenation:

$$\sum_k A_{ik}B_{kj}=(AB)_{ij}$$

Transposition:

$$A^T_{ij}=A_{ji}$$

so that our equation above becomes:

$$\sum_{i=1}^N\sum_{k=1}^\nu\beta_k\phi_k(x_i)\phi_j(x_i)=\sum_{i=1}^N y_i\phi_j(x_i)\,,$$

We introduce the matrix $\Phi$ such that:

$$\Phi_{ij}=\phi_j(x_i)\tag{2}\,,$$

in which case, with some further grouping and reassembling:

$$\sum_{k=1}^\nu\sum_{i=1}^N\Phi^T_{ji}\Phi_{ik}\beta_k=\sum_{i=1}^N \Phi_{ij}y_i\,,$$

So that we can get rid of the $i$ indices:

$$\sum_{k=1}^\nu(\Phi^T\Phi)_{jk}\beta_k=(\Phi^T y)_j\,,$$

where $y=(y_1,\dots,y_N)^T$, and now getting rid of $k$:

$$[(\Phi^T\Phi)\beta]_j=(\Phi^T y)_j\,,$$

with $\beta$ our vector of unknown parameters. As the equality holds for all $1\le j\le\nu$, it holds for the full vector, providing us with the final equation in its matrix form:

$$(\Phi^T\Phi)\vec\beta=\Phi^T y\,.$$

Assuming $(\Phi^T\Phi)$ is well conditioned (in particular, not singular), we get the formal solution by brute force inversion:

$$\vec\beta=(\Phi^T\Phi)^{-1}\Phi^T y\,.$$

The $(\Phi^T\Phi)^{-1}\Phi^T$ could be problematic if large (many fitting functions and/or many data points) and actually computed in this form, in which case one can see it as the notation for a so-called "pseudo-inverse" that deals with such an object in an efficient and stable way.

Let us now turn from the general theory to particular cases of interest.

By far, the most famous and used type of fitting is with a linear function, of the type:

$$f(x;\beta)=\beta_0+x\beta_1\,.$$

This is known as linear regression. In this case, our matrix become simply:

$$\Phi^T\Phi=\pmatrix{ 1 & 1 & 1 & \dots\\ x_1 & x_2 & x_3 & \dots }\pmatrix{ 1 & x_1 \\ 1 & x_2 \\ 1 & x_3 \\ \vdots & \vdots } = \pmatrix{ N & \sum x_i\\ \sum x_i & \sum x_i^2 }\,,$$

The inverse of a $2\times2$ matrix is the inverse of its determinant times the matrix where diagonal elements are swapped and off-diagonal ones are changed sign, so:

$$(\Phi^T\Phi)^{-1} = \frac{1}{N\sum x_i^2-(\sum x_i)^2} \pmatrix{ \sum x_i^2 & -\sum x_i\\ -\sum x_i & N }\,, $$

and since:

$$\Phi^T y=\pmatrix{ \sum y_i \\ \sum x_iy_i }$$

this brings us to the final, so-called closed-form result:

$$\beta=(\Phi^T\Phi)^{-1}\Phi^T y= \frac{1}{N\sum x_i^2-(\sum x_i)^2} \pmatrix{ \sum x_i^2\sum y_i-\sum x_i\sum x_iy_i \\ -\sum x_i\sum y_i + N\sum x_iy_i& }\,, $$

which is also exact, but remains so even without the computer. These formulas are used by most people as coming from the theory black box. Now you know where this comes from.

Like we did for interpolation, let us now progress from the linear case to polynomials. For interpolation, we had the strong result that a polynomial of order $N-1$ could fit $N$ data points exactly. There is a stronger result for fitting with polynomials, known as the Weierstrass Approximation Theorem, which states that every continuous function defined on a closed interval can be uniformly approximated as closely as desired by a polynomial function. This shows again how fitting overcomes interpolation, which breaks down in the hand of Runge's phenomenon. Weierstrass shows, on the other hand, that polynomials are good enough to get arbitrarily close, as long as we do not demand them to be exact on particular values.

The result also follows from the general theory, in which case:

$$f(x;\beta)\equiv\sum_{k=0}^N\beta_k x^k$$

and our $\Phi$ matrix (which by the way goes by the name of a Gramian) reads:

$$\Phi=\pmatrix{ 1 & x_1 & x_1^2 & x_1^3 & \dots \\ 1 & x_2 & x_2^2 & x_2^3& \dots \\ \vdots & & & & \vdots \\ 1 & x_N & x_N^2 & x_N^3 & \dots }$$

Namely, for quadratic fitting, this reads:

$$\Phi=\pmatrix{ 1 & x_1 & x_1^2 \\ 1 & x_2 & x_2^2 \\ &\vdots & \\ 1 & x_N & x_N^2 }$$

so that

$$\vec\beta =(\Phi^\dagger\Phi)^{-1}\Phi^\dagger\vec y=\pmatrix{ N & \sum_{i=1}^N x_i & \sum_{i=1}^N x_i^2 \\ \sum_{i=1}^N x_i & \sum_{i=1}^N x_i^2 & \sum_{i=1}^N x_i^3 \\ \sum_{i=1}^N x_i^2 & \sum_{i=1}^N x_i^3 & \sum_{i=1}^N x_i^4 }^{-1}\pmatrix{\sum_{i=1}^N y_i \\ \sum_{i=1}^N y_i x_i \\ \sum_{i=1}^N y_i x_i^2} $$

which, being $3\times3$, can be inverted formally, to produce exact results. It is more convenient, however, to have the computation performed numerically. Let us turn to the computer, to best interpolate quadratically, say:

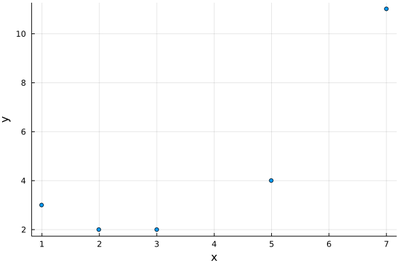

lx=[1, 2, 3, 5, 7]; ly=[3, 2, 2, 4, 11] scatter(lx, ly, legend=false, xlabel="x", ylabel="y")

It looks reasonable that one will get a good quadratic fit to such data (and clearly not with a mere linear fit). We provide directly $\Phi^\dagger\Phi$:

ΦdΦ=[length(lx) sum(lx) sum(lx.^2); 0 sum(lx.^2) sum(lx.^3); 0 0 sum(lx.^4)] ΦdΦ[2,1]=ΦdΦ[1,2]; ΦdΦ[3,1]=ΦdΦ[1,3]; ΦdΦ[3,2]=ΦdΦ[2,3];

we now have our matrix:

ΦdΦ 3×3 Array{Int64,2}: 5 18 88 18 88 504 88 504 3124

and its numerical inverse:

inv(ΦdΦ) 3×3 Array{Float64,2}: 2.78465 -1.58316 0.176972 -1.58316 1.04957 -0.124733 0.176972 -0.124733 0.0154584

and thus the best-fitting parameters:

beta=inv(ΦdΦ)*[sum(ly), sum(ly.*lx), sum(ly.*(lx.^2))] 3-element Array{Float64,1}: 5.33262260127934 -2.6982942430703787 0.49893390191898135

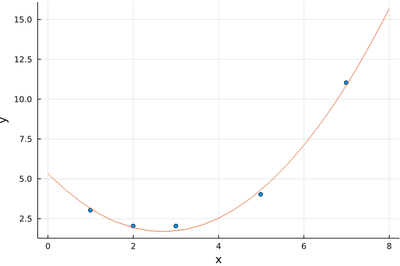

which provide the best quadratic fit:

plot!(x->beta[1]+beta[2]*x+beta[3]*x^2, 0, 8)

This is how close we manage to get:

(x->beta[1]+beta[2]*x+beta[3]*x^2).(lx) 5-element Array{Float64,1}: 3.1332622601279425 1.9317697228145079 1.728144989339036 4.31449893390198 10.892324093816775

to the sought values:

ly 5-element Array{Int64,1}: 3 2 2 4 11

with total squares of residuals:

sum(((x->beta[1]+beta[2]*x+beta[3]*x^2).(lx)-ly).^2) 0.2068230277185501

The statement is that no over parabola is such that the corresponding square distances get below this value. We have involved a numerical inverse. If we had recoursed to the exact result, we would have found the best parabola to be:

$$f(x)={2501\over469}-{2531\over938}x+{234\over469}x^2$$

with total squares of residuals $97/469$ which evaluates to the same numerical value that we have found above.

We now generalize the procedure to higher-order polynomials. This piece of code can do it for the general case:

We conclude with fitting with functions other than polynomials. In computer science, they typically provide enough generality, but in physics, many functional dependencies are of a different type. For instance, it is customary to encounter exponential behavior (which requires an infinite number of polynomials).

One can use the linear squares rigorously as long as the coefficients $\beta$ are linear. Sometimes it could appear there is a clever trick to extend its reach of action. Say we have data distributed according to (lifetimes of a radioactive sample of size $N(0)$):

$$N(t)=N(0)\exp(-\gamma t)$$

Then we could do the trick:

$$\ln(N(t))=\ln(N(0))-\gamma t$$

which is a linear function of the coefficients $(\ln N(0), \gamma$) and we could even use the analytical results above.

Tricks can be clever or they can be foolish and deceiving.

In this case, this is considered bad practice (although it could handy for a good-enough job quickly done). However, the noise is not distributed linearly, due to the nonlinear nature of the function. Therefore the regression analysis (the linear least square) will bias the data. Explore this case as an exercise by fitting nonlinearly and using the exact result above. For each time generate a cloud of data so that you can get access to the noise as well, and fit the mean as function of time. (You can also use the exprnd() function to simulate decay times.)

YYY

Clearly, seen as a line, this is not a good fit! While it is in fact excellent, as seen on the exponential fit figure, because the long time events are rare, and thus have more noise.

Nonlinear fit with Julia:

m(t, p) = p[1] * exp.(p[2] * t) p0 = [0.5, 0.5] myfit = curve_fit(m, xdata, ydata, p0)

This will return a composite typeLsqFitResult, of which we can extract:

fit.dof: degrees of freedom fit.param: best fit parameters fit.resid: vector of residuals fit.jacobian: estimated Jacobian at solution